Evaluate Model

Learn how to evaluate your model on the test data and submit it to the leaderboard. Local evaluation is not possible because model weights are encrypted and cannot be downloaded.

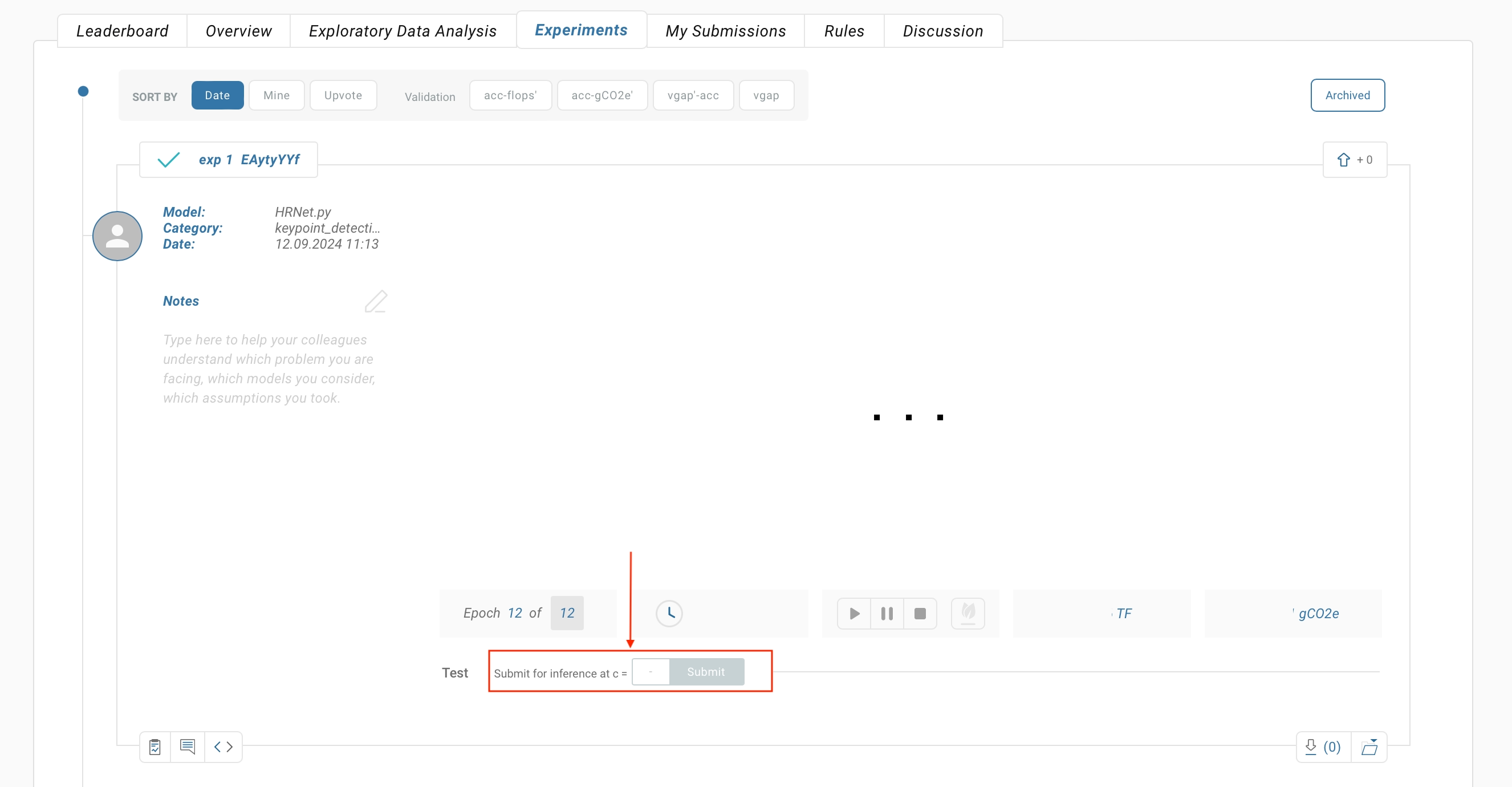

Submit to Leaderboard

- Select a promising training cycle for evaluation

- Click "Submit" to start inference on the test dataset

- Once inference is complete, view your model's performance in the Leaderboard tab

Note: Your team's remaining daily submissions are shown at the top of the use case page. The number of daily submissions is limited to prevent overfitting to the test dataset.

Leaderboard Rankings

Navigate to the Leaderboard section of your use case (e.g., Breast Cancer Screening Use Case) to compare submitted models.

Vendors are ranked by the score of their best-performing model on the test data. For each vendor, you can compare:

- Final model score on test data

- Model size

- Energy consumption

- Number of submissions

- Remaining compute budget per team
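The ranking rule above (each vendor is placed by its single best score, not by an average or by how often it submits) can be sketched as follows. This is a hypothetical illustration, not tracebloc's implementation: the `Submission` record, its fields, the sample scores, and the assumption that a higher score is better are all ours.

```python
from dataclasses import dataclass


@dataclass
class Submission:
    vendor: str   # hypothetical field: vendor/team name
    score: float  # hypothetical field: score on the test data (assumed higher is better)


def rank_vendors(submissions: list[Submission]) -> list[tuple[str, float]]:
    """Return (vendor, best_score) pairs ordered from best to worst vendor."""
    best: dict[str, float] = {}
    for s in submissions:
        # Keep only each vendor's best-performing submission.
        if s.vendor not in best or s.score > best[s.vendor]:
            best[s.vendor] = s.score
    # Order vendors by their best score, highest first.
    return sorted(best.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Made-up example submissions for illustration only.
    example = [
        Submission("vendor_a", 0.81),
        Submission("vendor_b", 0.86),
        Submission("vendor_a", 0.88),
        Submission("vendor_c", 0.79),
    ]
    for rank, (vendor, score) in enumerate(rank_vendors(example), start=1):
        print(rank, vendor, score)
```

In this example, vendor_a's weaker first submission (0.81) has no effect on its position; only its best score (0.88) counts, so it ranks first.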

Need Help?

- Email us at support@tracebloc.io

- Visit docs.tracebloc.io